Sqrt2

A question that crossed my mind was "can smartphones like the S24U actually resolve their 200MP sensor?" and led me down a path of investigating diffraction and resolvable megapixels.

A Diffraction-Limited System™ is any optical device (for this purpose, a camera) whose lens is sharp enough, and whose sensor has enough megapixels, that the only factor limiting resolution is diffraction: the spreading of light as it passes through an opening, in this case the lens aperture.

The resolvable detail of such a device is determined by the size of the Airy Disc (the diffraction pattern) relative to each individual photosite/pixel. If the diameter of the Airy Disc is larger than the pixel pitch, light that should land on one pixel spills into its neighbours, softening detail and reducing resolution.

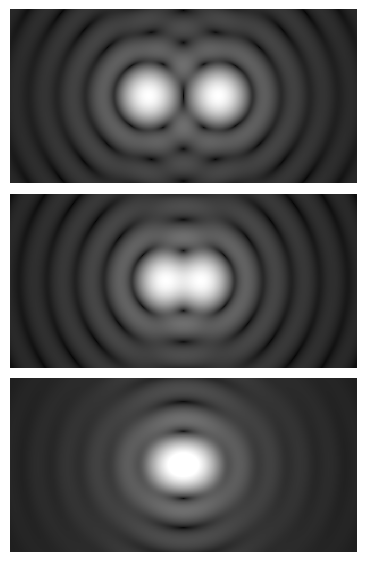

An Airy Disc, the product of the diffraction of light through an aperture.

Therefore, the smallest pixel pitch that still adds resolvable detail equals the Airy Disc's diameter.

According to Wikipedia, the radius of the Airy Disc (to its first dark ring) is given by:

d/2 = 1.22 * L * N

or

d = 2.44 * L * N

where,

d is the Airy Disc's diameter,

L is the wavelength of light, and

N is the F number of the lens.
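The diameter formula is simple enough to evaluate directly. A minimal Python sketch (the function name is just illustrative) that also shows how sensitive the result is to the wavelength chosen:

```python
def airy_disc_diameter_um(wavelength_um: float, f_number: float) -> float:
    """Airy disc diameter to the first dark ring: d = 2.44 * L * N (same unit as L)."""
    return 2.44 * wavelength_um * f_number


# Violet edge, the 0.5 µm approximation, and red edge of the visible range, at f/1.8
for wavelength in (0.38, 0.5, 0.75):
    d = airy_disc_diameter_um(wavelength, 1.8)
    print(f"L = {wavelength:.2f} µm at f/1.8 -> d = {d:.2f} µm")
```

At f/1.8 this gives roughly 1.67, 2.20 and 3.29 µm, so the disc at the red end is about twice the size of the one at the violet end, which is worth keeping in mind when a single wavelength stands in for the whole range.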

As for the wavelength, we are dealing with visible light, whose wavelength ranges from about 0.38 to 0.75 µm; as an approximation we can use green light, near the middle of that range, at roughly 0.5 µm.

Substituting L = 0.5 µm, this gives an Airy Disc diameter, and therefore a minimum pixel pitch, in µm, of

d = 2.44 * 0.5 * F = 1.22 * F

where F is the F number.
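Plugging in the apertures mentioned later in the post gives a feel for the pixel pitches involved. A sketch, with a hypothetical helper name, assuming the same 0.5 µm wavelength:

```python
# Apertures that come up in this post: f/1.7 and f/1.9 (main cameras), the f/1.8
# approximation, and the S24 Ultra's f/2.2 ultra-wide and f/3.4 5x telephoto.
APERTURES = (1.7, 1.8, 1.9, 2.2, 3.4)


def min_pixel_pitch_um(f_number: float, wavelength_um: float = 0.5) -> float:
    """Airy disc diameter in µm, treated here as the smallest useful pixel pitch."""
    # With the 0.5 µm approximation, 2.44 * 0.5 = 1.22, i.e. d = 1.22 * F
    return 2.44 * wavelength_um * f_number


for f in APERTURES:
    print(f"f/{f}: d = {min_pixel_pitch_um(f):.2f} µm")
```

The pitch ranges from about 2.07 µm at f/1.7 up to about 4.15 µm at f/3.4.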

Next, to calculate the number of resolvable megapixels from the sensor area A and the pixel pitch D, we can simply divide the sensor area by the area of each pixel (the pixel pitch squared):

MP = A / (D^2)

Note that the pixel pitch is measured in µm, but the sensor area is measured in mm^2. Since 1 mm^2 = 10^6 µm^2, we would need a conversion factor of 10^6 here, if not for the fact that a megapixel is 10^6 pixels, so the two cancel out. Neat.
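The unit bookkeeping can be checked by doing the conversion explicitly and comparing it with the shortcut. A small sketch; the 6 µm pitch in the example is an arbitrary value chosen only for illustration:

```python
def megapixels_explicit(area_mm2: float, pitch_um: float) -> float:
    area_um2 = area_mm2 * 1e6            # 1 mm^2 = 1,000,000 µm^2
    pixel_count = area_um2 / pitch_um**2
    return pixel_count / 1e6             # 1 MP = 1,000,000 pixels


def megapixels_shortcut(area_mm2: float, pitch_um: float) -> float:
    return area_mm2 / pitch_um**2        # the two factors of 10^6 cancel


# Full-frame area (864 mm^2) with an illustrative 6 µm pitch
print(megapixels_explicit(864, 6.0), megapixels_shortcut(864, 6.0))  # prints 24.0 24.0
```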

We can substitute the first equation into the second to get the maximum resolvable MP for a given sensor area and F number:

MP = A / (1.22*F)^2

where A is the sensor area in mm^2 and F is the F number of the lens.
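The combined formula as a Python function (name illustrative), under the same assumptions as above. It also makes the F-number dependence explicit:

```python
def max_resolvable_mp(area_mm2: float, f_number: float) -> float:
    """MP = A / (1.22 * F)^2, with A in mm^2.

    Assumes L = 0.5 µm and a minimum pixel pitch equal to the Airy disc diameter.
    """
    return area_mm2 / (1.22 * f_number) ** 2


# Same illustrative 100 mm^2 area at two apertures a factor of 2 apart
wide_open = max_resolvable_mp(100, 1.8)
stopped_down = max_resolvable_mp(100, 3.6)
print(f"f/1.8: {wide_open:.1f} MP, f/3.6: {stopped_down:.1f} MP, "
      f"ratio {wide_open / stopped_down:.1f}")
```

Because F is squared, doubling the F number cuts the resolvable megapixels to a quarter.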

As for the sensor area, let's start with a few sensor sizes used in ILCs (interchangeable-lens cameras):

Full Frame = 864 mm^2

APS-C = 370 mm^2 (or 330 mm^2 for Canon)

MFT = 225 mm^2

And here are some smaller sensor sizes, common in smartphones and compact cameras today:

1-Type = 116 mm^2, common in high-end compacts from Canon, Sony, and Panasonic, and appearing in the latest flagship smartphones from Sony, Vivo, and Xiaomi.

1/1.3 = 69 mm^2, as seen in the Samsung Galaxy S24 Ultra.

1/1.5 = 49 mm^2, as seen in the Samsung Galaxy S24+ and the Asus Zenfone 10.

1/2.3 = 28.5 mm^2, as seen in many superzoom compacts.

Now, as for the aperture: according to GSMArena, the Zenfone 10's aperture is f/1.9 and the S24 Ultra's is f/1.7, so we can approximate most smartphone main cameras' apertures as f/1.8, which lets us calculate the maximum number of resolvable megapixels:

MP = A / (1.22 * 1.8)^2 ≈ A / 4.82

For each sensor size listed above (all at f/1.8), the values are:

Full Frame = 180MP

APS-C = 77MP (68MP for Canon)

MFT = 47MP

1-Type = 24MP

1/1.3 = 14MP

1/1.5 = 10MP

1/2.3 = 6MP
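Reproducing the list with a short script is an easy way to check the arithmetic. A sketch using the areas quoted above and f/1.8 throughout:

```python
# Sensor areas in mm^2, as quoted in this post
SENSORS_MM2 = {
    "Full Frame": 864,
    "APS-C": 370,
    "APS-C (Canon)": 330,
    "MFT": 225,
    "1-Type": 116,
    "1/1.3": 69,
    "1/1.5": 49,
    "1/2.3": 28.5,
}


def max_resolvable_mp(area_mm2: float, f_number: float) -> float:
    """MP = A / (1.22 * F)^2, assuming L = 0.5 µm."""
    return area_mm2 / (1.22 * f_number) ** 2


for name, area in SENSORS_MM2.items():
    print(f"{name:>14}: {max_resolvable_mp(area, 1.8):6.1f} MP")
```

Rounded, this gives about 179, 77, 68, 47, 24, 14, 10 and 6 MP.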

From this we can see that while ILC sensors can easily resolve over 40MP at f/1.8, a smartphone sensor would struggle to resolve even 24MP (and only about 14MP at the S24 Ultra's 1/1.3-type size), let alone 50MP or 200MP.

No wonder even the best smartphone cameras are very bad at resolving fine detail, as seen here.

If so, why do smartphone manufacturers insist on cramming more megapixels than they can possibly resolve? Just for marketing? For computational photography of some sort?
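Working the relation backwards shows how far a 200MP sensor is from this limit: the pitch is D = sqrt(A / MP). A sketch using the post's 69 mm^2 area for the 1/1.3-type sensor and the S24 Ultra's f/1.7; the helper names are hypothetical:

```python
import math


def pixel_pitch_um(area_mm2: float, megapixels: float) -> float:
    """Invert MP = A / D^2 to get the pitch D in µm (A in mm^2, pixel count in MP)."""
    return math.sqrt(area_mm2 / megapixels)


def airy_disc_diameter_um(f_number: float, wavelength_um: float = 0.5) -> float:
    """d = 2.44 * L * N, with the post's 0.5 µm approximation by default."""
    return 2.44 * wavelength_um * f_number


AREA_1_1_3_MM2 = 69.0                   # 1/1.3-type area as quoted in this post
AIRY_F1_7 = airy_disc_diameter_um(1.7)  # S24 Ultra main camera aperture

for mp in (24, 50, 200):
    pitch = pixel_pitch_um(AREA_1_1_3_MM2, mp)
    print(f"{mp:>3} MP: pitch {pitch:.2f} µm, Airy disc ≈ {AIRY_F1_7 / pitch:.1f} pixels wide")
```

At 200MP the estimated pitch is about 0.59 µm, so the f/1.7 Airy disc (about 2.07 µm) spans roughly three and a half pixel widths. The real sensor dimensions may differ slightly from the 69 mm^2 used here.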

As a bonus, let's do the same calculations for the ultra-wide and the 5x telephoto cameras of the S24 Ultra:

UW:

1/2.55-Type, 24.7 mm^2, f/2.2 = 3.43MP

5x telephoto:

1/2.52-Type, 24.7 mm^2, f/3.4 = 1.44MP

Is it just me, or are these really low? That would mean the ultra-wide can resolve little more than a 2MP 1080p video frame, and the telephoto not even that.
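The same formula applied to the two auxiliary cameras, with a 1920 × 1080 frame (about 2.07 MP) for comparison. A sketch using the post's figures:

```python
def max_resolvable_mp(area_mm2: float, f_number: float) -> float:
    """MP = A / (1.22 * F)^2, assuming L = 0.5 µm."""
    return area_mm2 / (1.22 * f_number) ** 2


FRAME_1080P_MP = 1920 * 1080 / 1e6  # about 2.07 MP

# (area in mm^2, F number) for the S24 Ultra's auxiliary cameras, as quoted above
CAMERAS = {"Ultra-wide": (24.7, 2.2), "5x telephoto": (24.7, 3.4)}

for name, (area, f) in CAMERAS.items():
    mp = max_resolvable_mp(area, f)
    print(f"{name}: {mp:.2f} MP, {mp / FRAME_1080P_MP:.2f}x a 1080p frame")
```

The ultra-wide comes out at about 1.65× a 1080p frame and the telephoto at about 0.69×.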

I want to ask: is there a mistake somewhere in my calculations? Please let me know in the replies!
