I am trying to grasp my head around certain things.

Grasping my head helps me express frustration, but doesn't help much to understand anything.

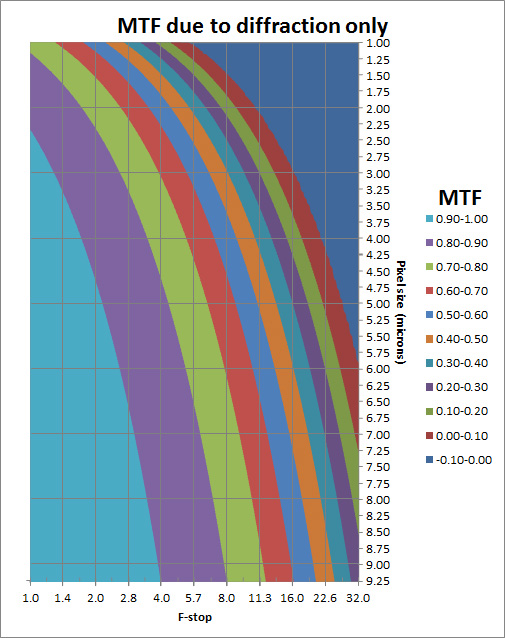

One of them is diffraction. According to Cambridge in Colour, it depends on the pixel pitch of the sensor, the aperture of the lens, and of course the final size and viewing distance of the print.
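
The sensor-side ingredients can be put into numbers. Below is a minimal sketch with hypothetical helper names. It assumes green light (550 nm), a nominal 36 mm full-frame width, a 3:2 aspect ratio with square pixels, and the standard Airy disk diameter to the first dark ring, 2.44·λ·N. Only the f-number (and wavelength) sets the blur on the sensor. The megapixel count only sets the pitch that slices it.

```python
import math

WAVELENGTH_UM = 0.55  # assumption: green light, 550 nm
FF_WIDTH_MM = 36.0    # assumption: nominal full-frame width
ASPECT = 3 / 2        # assumption: 3:2 sensor with square pixels


def airy_diameter_um(f_number, wavelength_um=WAVELENGTH_UM):
    """Airy disk diameter to the first dark ring, 2.44 * lambda * N, in micrometres."""
    return 2.44 * wavelength_um * f_number


def pixel_pitch_um(megapixels, width_mm=FF_WIDTH_MM, aspect=ASPECT):
    """Approximate pixel pitch in micrometres from MP count and sensor width."""
    pixels_across = math.sqrt(megapixels * 1e6 * aspect)
    return width_mm * 1000 / pixels_across


for n in (5.6, 8, 11, 22):
    # The blur on the sensor depends only on the f-number (and wavelength).
    print(f"f/{n}: Airy disk about {airy_diameter_um(n):.1f} um on any sensor")
for mp in (24, 42):
    # Pixel count only changes how finely that blur is sampled.
    print(f"{mp} MP FF: pixel pitch about {pixel_pitch_um(mp):.2f} um")
```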

One can academically consider diffraction at the pixel level, that is, how big the blur is when measured in units of these specific pixels, but that has nothing to do with practical concerns when deciding how much pixel density you want. What people who fixate on pixel-level diffraction tend to overlook is that pixel density does not alter the underlying analog diffraction. Higher pixel density simply makes people view each square mm of the sensor area at a larger size, which makes the same analog diffraction look larger. Anyone who takes the same f/22 photo on a 12.5MP FF camera and a 50MP FF camera and compares them both at 100% pixel view is a pixel fool, to put it bluntly. Anyone who has a clear head on the subject will look at the 12.5MP image at 200% if they are comparing it to the 50MP image at 100%. Any other pair of magnifications that differ by 2x also works, like 140% vs 280%, or 50% vs 100%, but remember that even though 50% makes an image look sharper, it is actually discarding information. Each pair of 2x-different magnifications has its own set of potential resampling artifacts, but the 50MP image will generally have the fewest artifacts.
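
The 100%-vs-200% comparison can be checked numerically. This is a sketch with hypothetical helper names. It assumes 550 nm light, a 36 mm wide 3:2 sensor, and an Airy diameter of 2.44·λ·N. It first measures the f/22 blur in each sensor's own pixels. It then measures the blur in screen pixels once the 12.5MP image is zoomed to match the 50MP image at 100%.

```python
import math

WAVELENGTH_UM = 0.55  # assumption: 550 nm light
FF_WIDTH_MM = 36.0    # assumption: nominal full-frame width, 3:2 aspect


def pixels_across(megapixels):
    """Horizontal pixel count of a 3:2 sensor with the given MP count."""
    return math.sqrt(megapixels * 1e6 * 1.5)


def blur_in_sensor_pixels(megapixels, f_number):
    """Airy disk diameter measured in this sensor's own pixels."""
    pitch_um = FF_WIDTH_MM * 1000 / pixels_across(megapixels)
    return 2.44 * WAVELENGTH_UM * f_number / pitch_um


def matched_zoom(mp_low, mp_high):
    """Zoom for the low-MP image so both show the same sensor area per screen pixel."""
    return math.sqrt(mp_high / mp_low)


LOW_MP, HIGH_MP, F_NUMBER = 12.5, 50.0, 22

zoom = matched_zoom(LOW_MP, HIGH_MP)  # 2.0, i.e. 200% vs 100%
low_px = blur_in_sensor_pixels(LOW_MP, F_NUMBER)
high_px = blur_in_sensor_pixels(HIGH_MP, F_NUMBER)

print(f"Both at 100%: {low_px:.2f} vs {high_px:.2f} screen px (looks worse on 50MP)")
print(f"12.5MP at {zoom:.0%} vs 50MP at 100%: {low_px * zoom:.2f} vs {high_px:.2f} screen px")
```

At matched magnification the blur is the same size on screen. The "worse" 50MP result at 100% is only the result of viewing it at twice the magnification.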

What ultimately matters is how big the diffraction blur is in the final display of the image, or in terms of "image" diffraction, the size of the blur relative to the image.
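
Measured against the image itself, the blur does not depend on pixel count at all. Here is a minimal sketch under stated assumptions: 550 nm light and a 36 mm sensor width. The 600 mm print width is an arbitrary example, and viewing distance would then decide whether a blur of that size is visible.

```python
WAVELENGTH_UM = 0.55     # assumption: 550 nm light
SENSOR_WIDTH_MM = 36.0   # assumption: nominal full-frame width


def blur_fraction_of_width(f_number, sensor_width_mm=SENSOR_WIDTH_MM):
    """Airy disk diameter as a fraction of the image width; no pixel count involved."""
    airy_mm = 2.44 * WAVELENGTH_UM * f_number / 1000
    return airy_mm / sensor_width_mm


def blur_on_print_mm(f_number, print_width_mm, sensor_width_mm=SENSOR_WIDTH_MM):
    """Size of that same blur once the whole image is printed print_width_mm wide."""
    return blur_fraction_of_width(f_number, sensor_width_mm) * print_width_mm


for n in (8, 22):
    print(f"f/{n}: {blur_fraction_of_width(n):.5%} of the width, "
          f"{blur_on_print_mm(n, 600):.2f} mm on a 600 mm wide print")
```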

That's not to say that the relationship between diffraction blur size and pixel size never has any practical meaning. If you are wondering how much you should stop down to get deeper depth of field, you are trading maximum detail sharpness in the center of the focal depth for more depth. Larger pixels might make you lean more towards stopping down, because you can't see the loss of sharp detail as much, but only because THE LARGER PIXELS WOULD HAVE THROWN AWAY A LOT OF THAT DETAIL ANYWAY. For any given DOF you choose, however, a higher pixel density would have made the entire image better.

Neglecting print size and viewing distance, and focusing just on aperture and pixel pitch: will increased megapixels lead to hitting the diffraction limit sooner?

Do you mean increased pixel density? "Increased megapixels" can mean a sensor of a different size, which brings in other issues. One thing at a time; that's why I used 12.5MP FF vs 50MP FF in my example.

So if you were to jam 60 or 70 megapixels into a full-frame sensor, wouldn't you reach the diffraction limit very soon?

Pixels shouldn't, ideally, be visible or sharp at all. There should be so many of them that sharp detail can be rendered without seeing pixels, or any pixelation or aliasing.
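
The pixel-level "diffraction limit" the question has in mind can be sketched as the f-number at which the Airy disk spans a fixed number of pixels. The 2-pixel threshold used here is an assumed rule of thumb, not a physical constant. Other assumptions: 550 nm light and a 36 mm wide 3:2 sensor. Helper names are hypothetical. The threshold moves to wider apertures as MP rises. That only reflects the same blur being expressed in smaller units. At any given f-number, the blur on the sensor is unchanged.

```python
import math

WAVELENGTH_UM = 0.55    # assumption: 550 nm light
FF_WIDTH_MM = 36.0      # assumption: nominal full-frame width, 3:2 aspect
PIXELS_PER_AIRY = 2.0   # assumption: rule-of-thumb threshold, not a physical limit


def pixel_pitch_um(megapixels):
    """Approximate pixel pitch in micrometres for a 3:2 full-frame sensor."""
    return FF_WIDTH_MM * 1000 / math.sqrt(megapixels * 1e6 * 1.5)


def pixel_level_threshold(megapixels):
    """f-number at which the Airy disk spans PIXELS_PER_AIRY pixels."""
    return PIXELS_PER_AIRY * pixel_pitch_um(megapixels) / (2.44 * WAVELENGTH_UM)


for mp in (24, 42, 60, 70, 100):
    print(f"{mp:>3} MP: Airy disk reaches {PIXELS_PER_AIRY:.0f} px at about "
          f"f/{pixel_level_threshold(mp):.1f}")
```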

I think it was some manager from Fujifilm who said that FF's limit would be around 60MP to 100MP. Was that what he was referring to?

He doesn't know what he is talking about, unless he is talking about current data/bandwidth/heat concerns, or what the company is willing to produce. A person who truly understands the science of imaging would never say anything like that with regard to maximum IQ. The only problems that would arise, in terms of optics and resolution, are that narrow photosites far off-axis may suffer some radial shifts of light or cross-talk into the wrong-colored pixels. The center of the frame would be awesome, guaranteed, with several hundred MP, as long as you are not silly enough to conflate image quality with PQ (pixel view quality) on a low-density monitor.

I did some testing with an A7 III and an A7R III, using the same lens on a lens test chart at various apertures (f/5.6 to f/11), f/5.6 being where diffraction starts to kick in. When I downsampled the A7R III image (no sharpening on either), I still found the A7R III to be sharper at all apertures.

Why is that so?

Why would you expect otherwise? They have the same image-level diffraction, and the denser sensor sampled everything better (more real detail, including more complete color resolution).
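
A simple modulation transfer function (MTF) model shows why the denser sensor stays ahead. In this model, system MTF = diffraction MTF × pixel-aperture MTF, evaluated at the same detail frequency in line pairs per picture height. This sketch assumes an ideal aberration-free lens, 550 nm light, a 24 mm sensor height, and 100% fill-factor square pixels. It ignores the Bayer filter, demosaicing, and any AA filter. The row counts (4000 and 5304) are the two cameras' vertical pixel counts, and the helper names are hypothetical. At a given f-number the diffraction term is identical for both cameras. Only the pixel term differs, and it favors the smaller pixels.

```python
import math

WAVELENGTH_MM = 0.00055   # assumption: 550 nm light
SENSOR_HEIGHT_MM = 24.0   # assumption: nominal full-frame height


def diffraction_mtf(freq_cyc_per_mm, f_number):
    """MTF of an ideal aberration-free circular aperture in incoherent light."""
    cutoff = 1.0 / (WAVELENGTH_MM * f_number)
    x = freq_cyc_per_mm / cutoff
    if x >= 1.0:
        return 0.0
    return (2 / math.pi) * (math.acos(x) - x * math.sqrt(1 - x * x))


def pixel_mtf(freq_cyc_per_mm, pitch_mm):
    """1D MTF of a 100%-fill-factor pixel: |sinc(f * p)|."""
    arg = math.pi * freq_cyc_per_mm * pitch_mm
    return 1.0 if arg == 0 else abs(math.sin(arg) / arg)


def system_mtf(lp_per_picture_height, f_number, rows):
    """Combined MTF at a detail frequency given relative to the whole image."""
    freq = lp_per_picture_height / SENSOR_HEIGHT_MM  # cycles per mm on the sensor
    pitch = SENSOR_HEIGHT_MM / rows
    return diffraction_mtf(freq, f_number) * pixel_mtf(freq, pitch)


CAMERAS = {"A7 III": 4000, "A7R III": 5304}  # vertical pixel counts
LP_PER_PH = 1500  # detail frequency, below both cameras' Nyquist limits

for n in (5.6, 8, 11, 16, 22):
    values = {name: system_mtf(LP_PER_PH, n, rows) for name, rows in CAMERAS.items()}
    print(f"f/{n}: " + ", ".join(f"{name} {v:.3f}" for name, v in values.items()))
```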

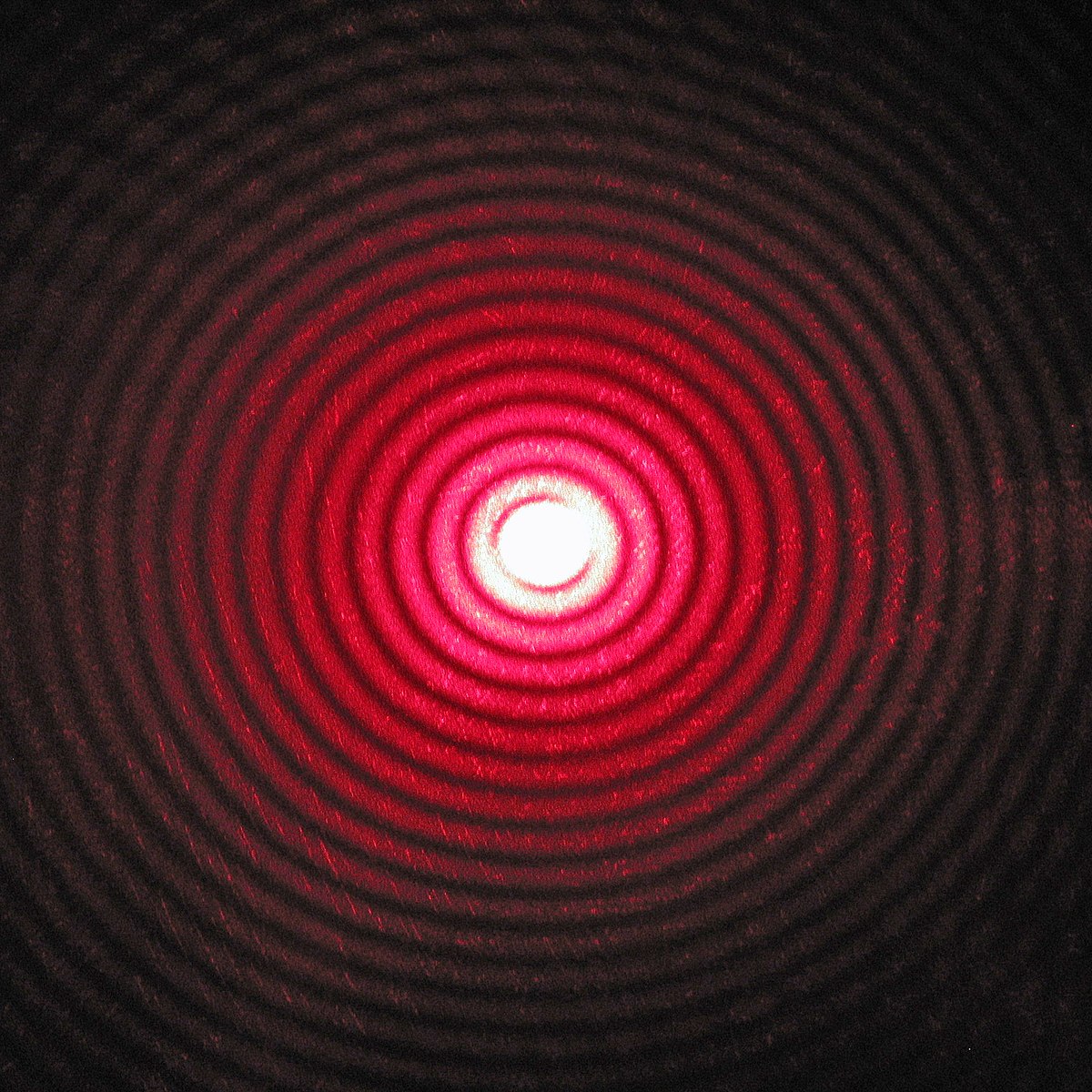

Forget any idea that diffraction and pixel size have some kind of special relationship. Have you ever seen one of those kitchen tools that takes a slice of something and chops it into little squares? That's what pixels are like. There is an analog diffraction blur on the sensor, and the size of the pixels does not alter it; the pixels just slice it differently. The fewer little squares or cubes you have, the less detail you have to work with, and the more distorted it becomes when you resample it.
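
The kitchen-slicer picture can be sketched in one dimension. The same fixed analog blur profile is box-averaged by 6 µm pixels and by 3 µm pixels over the same span. The profile is a Gaussian used as a stand-in for the real Airy pattern, which is an assumption. Averaging each pair of 3 µm pixels reproduces the 6 µm pixels. So the finer slicing keeps everything the coarse one has, plus detail the coarse one averaged away. The pitches and blur width are hypothetical.

```python
import math


def analog_blur(x_um, sigma_um=5.0):
    """Stand-in for the analog diffraction blur: a Gaussian profile (assumption)."""
    return math.exp(-0.5 * (x_um / sigma_um) ** 2)


def box_sample(signal, start_um, pitch_um, count, steps=200):
    """Each pixel reports the average of the analog signal over its own width."""
    samples = []
    for i in range(count):
        left = start_um + i * pitch_um
        dx = pitch_um / steps
        # Midpoint-rule average over the pixel's width.
        total = sum(signal(left + (k + 0.5) * dx) for k in range(steps))
        samples.append(total / steps)
    return samples


# Same analog blur, same 60 um span, sliced two ways (hypothetical pitches).
coarse = box_sample(analog_blur, -30.0, 6.0, 10)
fine = box_sample(analog_blur, -30.0, 3.0, 20)

# Merging each pair of fine slices gives back the coarse slices.
fine_binned = [(fine[2 * i] + fine[2 * i + 1]) / 2 for i in range(len(coarse))]

print("coarse:     ", " ".join(f"{v:.3f}" for v in coarse))
print("fine binned:", " ".join(f"{v:.3f}" for v in fine_binned))
print(f"peak seen by coarse pixels {max(coarse):.3f}, by fine pixels {max(fine):.3f}")
```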

Does that gain in resolution outweigh the diffraction?

There is no difference in diffraction, relative to the full image.

Or is the jump from 24MP to 42MP just not that big?

You'd get even better results with 100MP.

Or should I have tested at smaller apertures? Maybe then I would have seen a difference. (I rarely use f/16, so I didn't feel I should test it.)

There won't be any detriment for the 42MP, no matter what common high f-number you use, even f/64 with teleconverters.

What do you think? Will 70MP FF cameras even be useful?

Definitely, but not as useful as a gigapixel FF camera would be. A gigapixel FF camera would let you rotate your capture, correct perspective distortion and CA, etc., without many artifacts, and if you choose to downsample to 2MP, your result will be much better than any real 2MP sensor would have produced.
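
Aliasing is one reason a downsampled high-density capture can beat a native low-density sensor. Here is a 1D sketch under stated assumptions. The native sensor has no anti-aliasing filter, so its large pixels simply box-average the scene. It is compared against 8x finer pixels followed by a Hann-windowed-sinc low-pass filter and decimation. A detail frequency above the coarse Nyquist limit (0.75 cycles per coarse pixel) aliases into false detail on the native sensor. The filtered downsample almost entirely removes it. A detail frequency below the limit (0.25) is kept by both. The factor of 8, the 129-tap filter, and the test frequencies are arbitrary choices for illustration.

```python
import math

FACTOR = 8          # fine pixels per coarse pixel (hypothetical)
COARSE_COUNT = 64   # coarse pixels in the 1D strip
TAPS = 129          # low-pass filter length (arbitrary choice)


def box_sample(freq, pitch, count, steps=64):
    """Box-average sin(2*pi*freq*x) over each pixel; freq in cycles per coarse pixel."""
    out = []
    for i in range(count):
        left = i * pitch
        dx = pitch / steps
        total = sum(math.sin(2 * math.pi * freq * (left + (k + 0.5) * dx))
                    for k in range(steps))
        out.append(total / steps)
    return out


def hann_lowpass(cutoff, taps):
    """Hann-windowed sinc low-pass; cutoff in cycles/sample, unit gain at DC."""
    mid = (taps - 1) / 2
    h = []
    for n in range(taps):
        t = n - mid
        ideal = 2 * cutoff if t == 0 else math.sin(2 * math.pi * cutoff * t) / (math.pi * t)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (taps - 1))
        h.append(ideal * window)
    total = sum(h)
    return [v / total for v in h]


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


def coarse_vs_downsampled(freq):
    """RMS signal from a native coarse sensor vs. a filtered, decimated fine sensor."""
    coarse = box_sample(freq, 1.0, COARSE_COUNT)
    fine = box_sample(freq, 1.0 / FACTOR, COARSE_COUNT * FACTOR)
    kernel = hann_lowpass(0.5 / FACTOR, TAPS)  # cut off at the coarse Nyquist limit
    filtered = [sum(w * fine[i + j] for j, w in enumerate(kernel))
                for i in range(len(fine) - TAPS + 1)]
    return rms(coarse), rms(filtered[::FACTOR])


for freq in (0.25, 0.75):
    native, downsampled = coarse_vs_downsampled(freq)
    print(f"{freq} cycles/coarse px: native {native:.3f}, downsampled {downsampled:.3f}")
```

Both paths keep the 0.25 detail. At 0.75, only the native sensor passes a false low-frequency pattern.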