Erik Kaffehr

This is intended to be a small summary of the aspects that go into image quality. The discussion ignores color and things that cannot be measured.

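The major factors are:

- Photons - that is, the light forming the image

- Lens OTF - that is, the information that the lens transfers to the sensor

- Diffraction - that is, the reduction of lens OTF due to the physical diameter of the aperture

- The OLP filter - it reduces lens OTF to match sensor resolution

- Defocus - the loss of OTF due to the subject being out of focus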

Photons are creating the image

Photons are quanta of light, so light arrives in discrete packets. The pixels capture a significant part of the incoming photons, and each captured photon frees an electron that is collected in the photodiode as charge. Each pixel can hold only a limited number of these electrons; that limit is called Full Well Capacity (FWC). It is an important parameter of the pixel and it is normally measured in electron charges.

Arrival of photons is a random phenomenon. So in absolutely even light the distribution of captured electron charges, representing photons, will be a Poisson distribution.

The interesting aspect of a Poisson distribution is that for an average count of N, the standard deviation is the square root of N.

So the signal-to-noise ratio is N divided by the square root of N, which is the square root of N. Increasing the photon count by a factor of four cuts the noise relative to the signal in half. The practical significance of this is that we want to capture as many photons as possible.

This shows a histogram of a ColorChecker, exposed around 1 EV under saturation. Note that the highest peak is narrow and the peaks get lower and wider for the darker patches. The widening of the peaks is the noise.

Now, check this ColorChecker that is exposed around 6 EV under saturation. The peaks get much wider. The darkest patches and the cardboard surrounding the patches blend into one another.

So reducing exposure increases noise. This photon noise (shot noise) is usually the dominant part of the noise. Increasing ISO has no effect on photon statistics.
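The square-root rule and the effect of underexposure can be put into numbers with a small Python sketch. The full well capacity of 50,000 electrons is a made-up round figure for illustration, not a measured value for any camera, and read noise is ignored.

```python
import math

def shot_noise_snr(photoelectrons: float) -> float:
    """SNR of a Poisson-distributed count: N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photoelectrons)

def electrons_at(ev_below_saturation: float, full_well: float) -> float:
    """Each EV below saturation halves the collected charge."""
    return full_well / 2 ** ev_below_saturation

FULL_WELL = 50_000  # hypothetical full well capacity, in electrons

for ev in (1, 6):
    n = electrons_at(ev, FULL_WELL)
    print(f"{ev} EV under saturation: {n:.0f} e-, SNR about {shot_noise_snr(n):.0f}")
```

With these assumed numbers the brightest tone drops from an SNR of roughly 158 to roughly 28 when exposure is cut by five stops, which is the widening of the histogram peaks described above.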

Photon statistics depend much on sensor size, but not so much on pixel size. How many photons a sensor can detect depends on its surface area and photodiode technology. Whether that number of photons is distributed over 25 MP or 100 MP matters little.
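A rough way to see why pixel count matters little: compare two sensors that collect the same total charge, after both images are resampled to the same output size. This is a simplified sketch that considers shot noise only (read noise per pixel is ignored), and the total of 1e12 electrons and the 8 MP output size are arbitrary assumptions.

```python
import math

def output_pixel_snr(total_electrons: float, sensor_mp: float, output_mp: float) -> float:
    """Shot-noise SNR per output pixel after resampling to a common output size."""
    per_sensor_pixel = total_electrons / (sensor_mp * 1e6)
    sensor_pixels_per_output_pixel = sensor_mp / output_mp
    # Charges add up when sensor pixels are combined into one output pixel.
    combined_signal = per_sensor_pixel * sensor_pixels_per_output_pixel
    return math.sqrt(combined_signal)

TOTAL_ELECTRONS = 1e12  # assumed total charge collected by the whole sensor
for mp in (25, 100):
    snr = output_pixel_snr(TOTAL_ELECTRONS, mp, output_mp=8)
    print(f"{mp} MP sensor, viewed at 8 MP: SNR about {snr:.0f}")
```

Both cases give the same result, because the sensor pixel count cancels out once the images are compared at the same size.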

Lens OTF/MTF

The illustrations here are taken from Brandon Dube's article:

https://www.lensrentals.com/blog/2017/10/the-8k-conundrum-when-bad-lenses-mount-good-sensors/

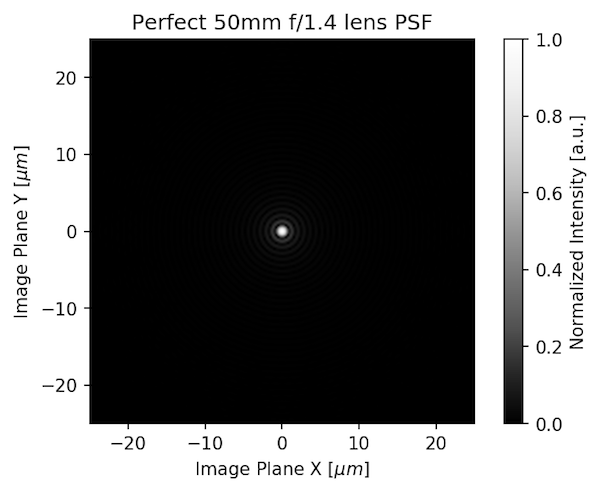

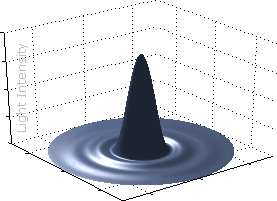

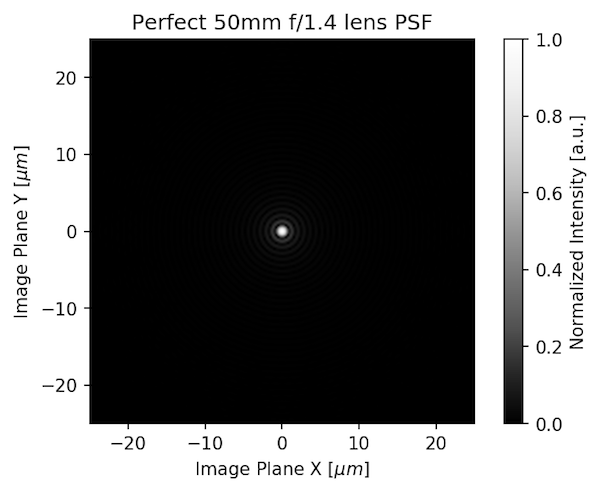

If we shoot an image of a very distant small light source, like a distant star, through a perfect lens, it would look like this:

Note the central spot, surrounded by small concentric circles. The small circles are called 'Airy rings' and they are a diffraction pattern caused by the aperture of the lens.

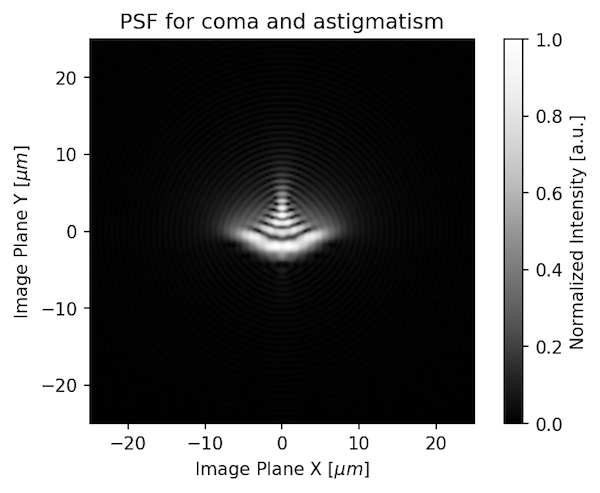

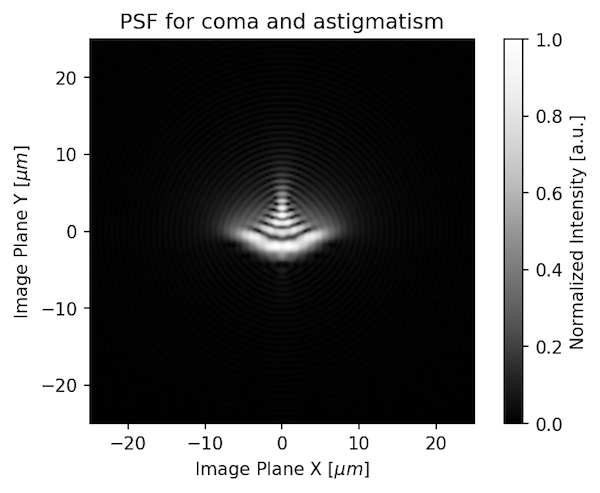

Now, lenses are not perfect, they have aberrations:

This is a lens affected by astigmatism and coma.

Optical engineers try to eliminate these aberrations. Most aberrations increase moving away from the optical axis, and they usually decrease when stopping down.

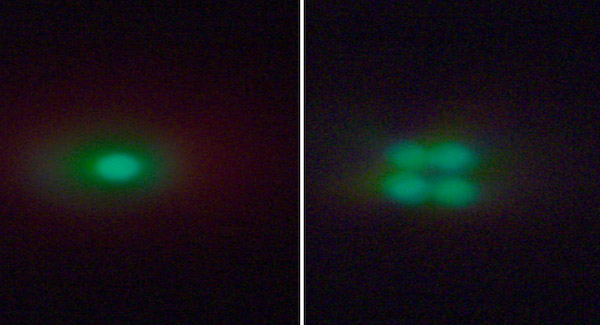

A real spot image may look like this.

Such a spot image is called a Point Spread Function (PSF).

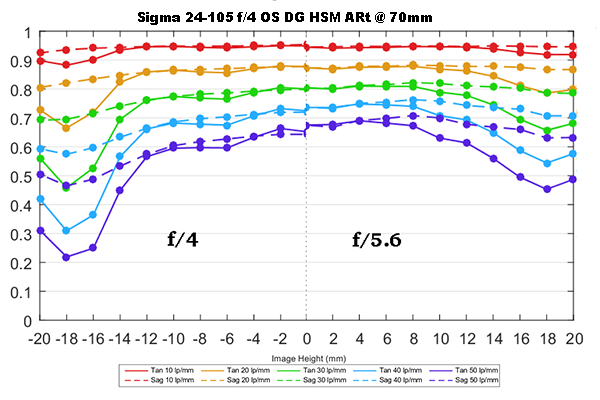

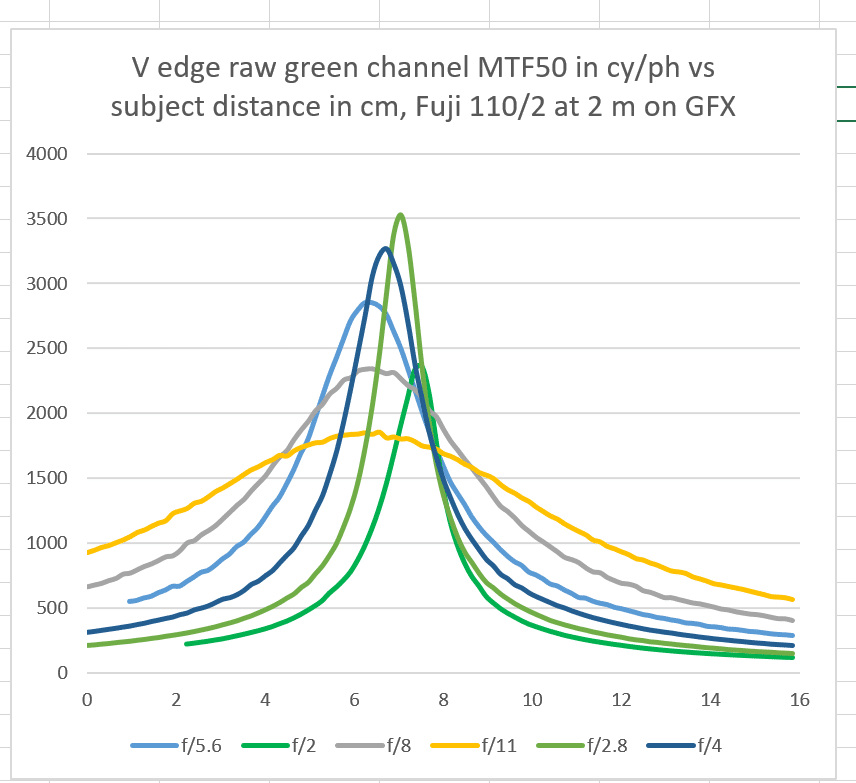

There is a mathematical way of describing a PSF called the OTF (Optical Transfer Function), which is the 'Fourier transform' of the PSF. The OTF is a complex function. Normally lenses are characterized with the absolute value of the OTF. That is called the MTF (Modulation Transfer Function). Both OTF and MTF are two dimensional, but MTF is normally measured in only two directions.
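The relation between PSF, OTF and MTF can be sketched in a few lines of Python. This is a one-dimensional toy example with a Gaussian PSF (real PSFs are two dimensional and rarely Gaussian); the function names and the choice of sigma are my own assumptions.

```python
import cmath
import math

def gaussian_psf(sigma: float, half_width: int) -> list[float]:
    """Sampled 1D Gaussian PSF, normalised so that its samples sum to 1."""
    samples = [math.exp(-0.5 * (x / sigma) ** 2)
               for x in range(-half_width, half_width + 1)]
    total = sum(samples)
    return [s / total for s in samples]

def otf(psf: list[float], cycles_per_sample: float) -> complex:
    """Fourier transform of the PSF at one spatial frequency (complex valued)."""
    centre = len(psf) // 2
    return sum(p * cmath.exp(-2j * math.pi * cycles_per_sample * (i - centre))
               for i, p in enumerate(psf))

def mtf(psf: list[float], cycles_per_sample: float) -> float:
    """MTF is the absolute value of the OTF."""
    return abs(otf(psf, cycles_per_sample))

psf = gaussian_psf(sigma=2.0, half_width=20)
for f in (0.0, 0.05, 0.1, 0.2):
    print(f"{f:.2f} cycles/sample: MTF = {mtf(psf, f):.3f}")
```

The MTF starts at 1 for zero frequency and falls as the detail gets finer, which is the general shape of the measured curves further down.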

Let's take this image:

Two pairs of vertical bars, both equally bright.

Now blur it with a Gaussian blur of radius 5.

We see that both line pairs are blurred, but the large one still keeps its contrast while the smaller and tighter one has lost much of its contrast.

A mathematician would say that we have convolved the image with a Gaussian PSF having a radius of 5.
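The same experiment can be sketched in one dimension. I assume here that 'radius 5' corresponds to a Gaussian sigma of 5 pixels, and I use repeating bar patterns with periods of 40 and 16 pixels as stand-ins for the wide and the tight line pair; both choices are assumptions for illustration.

```python
import math

def gaussian_kernel(sigma: float) -> list[float]:
    """Gaussian weights out to 4 sigma, normalised to sum to 1."""
    half_width = int(4 * sigma)
    weights = [math.exp(-0.5 * (x / sigma) ** 2)
               for x in range(-half_width, half_width + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def bars(period: int, length: int) -> list[float]:
    """Bright bars (1.0) and dark gaps (0.0) of equal width."""
    return [1.0 if i % period < period // 2 else 0.0 for i in range(length)]

def blur(signal: list[float], kernel: list[float]) -> list[float]:
    """Circular convolution, so the pattern has no edges."""
    n = len(signal)
    half_width = len(kernel) // 2
    return [sum(w * signal[(i + j - half_width) % n] for j, w in enumerate(kernel))
            for i in range(n)]

def contrast(signal: list[float]) -> float:
    """Michelson contrast: (max - min) / (max + min)."""
    brightest, darkest = max(signal), min(signal)
    return (brightest - darkest) / (brightest + darkest)

kernel = gaussian_kernel(sigma=5.0)
coarse = contrast(blur(bars(period=40, length=240), kernel))
fine = contrast(blur(bars(period=16, length=240), kernel))
print(f"coarse bars keep {coarse:.0%} contrast, fine bars keep {fine:.0%}")
```

Before the blur both patterns have a contrast of 100%. After it the coarse pattern keeps most of its contrast while the fine one keeps only a small fraction, which is exactly what an MTF curve expresses.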

Now let's take some real test samples:

This is a small crop of a decently sharp image shot on my Hasselblad 555/ELD with a 39 MP P45+ back, at 1:1 view.

This is the same image but shot with a Softar II filter.

The wedges allow us to calculate the MTF for each combo of lens, filter and sensor:

The upper figure shows the MTF. The blue line shows the MTF of the lens and the red one the MTF of the lens + the Softar II.

So, these curves show how much contrast is lost with varying detail: coarse detail on the left, fine detail on the right. The vertical line shows the resolution limit of the sensor.

Resolution is the limit, where we can tell things apart. We can look at fine details of the small resolution chart:

This shows two crops at 200%. They should be viewed at original size, below. The structures within the black frame are resolved in both images. But in the left image they are resolved with high contrast and in the right one with low contrast.

After viewing the picture at actual size we can notice that the image on the left has color artifacts while the one on the right has low contrast but almost no artifacts.

In this case resolution is limited by the sensor. The MTF we measured was above zero at Nyquist, which causes aliasing artifacts.
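For reference, the Nyquist limit follows directly from the pixel pitch, and detail finer than Nyquist does not vanish but folds back to a lower, false frequency. A small sketch, using a 6 micron pitch purely as an example value:

```python
def nyquist_cy_per_mm(pixel_pitch_um: float) -> float:
    """Nyquist limit: one cycle needs at least two pixels."""
    return 1000.0 / (2.0 * pixel_pitch_um)

def aliased_frequency(frequency_cy_per_mm: float, pixel_pitch_um: float) -> float:
    """Frequency at which sampled detail appears; unchanged below Nyquist."""
    sampling = 1000.0 / pixel_pitch_um  # samples per mm
    folded = frequency_cy_per_mm % sampling
    return min(folded, sampling - folded)

PITCH_UM = 6.0  # example pixel pitch in microns
print(f"Nyquist: {nyquist_cy_per_mm(PITCH_UM):.1f} cy/mm")
for f in (50.0, 100.0):
    print(f"{f:.0f} cy/mm shows up as {aliased_frequency(f, PITCH_UM):.1f} cy/mm")
```

With a 6 micron pitch, Nyquist is about 83 cy/mm, so 50 cy/mm detail is recorded as it is, while 100 cy/mm detail shows up as a false pattern at about 67 cy/mm if the lens still delivers contrast there.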

We could say that the image on the left shows a lens with high resolution and good MTF for small detail, while the one on the right has good resolution with poor MTF for small detail.

It may be said that 'slanted wedges' here are too small and not sharp enough for good MTF calculations, but it may still be a good demo.

OLP filter

As discussed above, if the lens has too much MTF at the Nyquist limit, aliasing will occur. Aliasing may seem to accentuate the perception of sharpness, as it yields false detail in what would normally be blurred. But with a 'Bayer' filter in front of the sensor, that false detail arises in different positions for different colors, and we can get 'color moiré'.

To reduce that, cameras with large pixels mostly have an Optical Low Pass (OLP) filter. It is normally implemented using a four-way beam splitter:

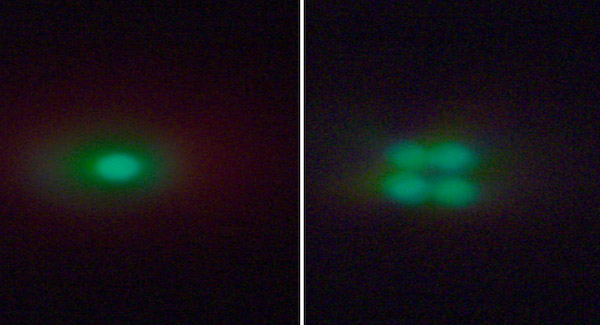

Photograph of a point light source, without an OLP and with an OLP filter that was taken off a sensor.

The OLP filter acts as a softening filter that has most effect on the high frequencies, near Nyquist. OLP filters were optional for some early MFD cameras, but they were expensive and they reduce sharpness, so later MFDs dropped OLP filtering. (For the Mamiya ZD, the normal IR filter was US$1000 and the OLP filter was US$3000, as I recall.)

Diffraction

Diffraction is a property of light. When light passes through a hole, a diffraction pattern emerges. Here is a nice diffraction pattern from Cambridge in Colour:

The diameter of the Airy pattern, as it is normally called, increases with the f-stop number. So, when we stop down, the Airy pattern gets larger.

This shows a 3.9 micron pixel grid with an Airy pattern for f/5.6. It seems that this may not affect image quality, but even apertures like f/5.6 have a small effect on sharpness.

Stopping down to f/16 will have a significant effect on sharpness.
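The size of the Airy disk can be estimated with the standard formula, diameter to the first dark ring = 2.44 x wavelength x f-number. The sketch assumes green light at 0.55 microns; the 3.9 micron pitch is the one from the illustration.

```python
def airy_disk_diameter_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Diameter of the Airy disk out to the first dark ring, in microns."""
    return 2.44 * wavelength_um * f_number

PITCH_UM = 3.9  # pixel pitch used in the illustration
for n in (5.6, 8, 11, 16, 22):
    d = airy_disk_diameter_um(n)
    print(f"f/{n}: Airy disk {d:.1f} um, about {d / PITCH_UM:.1f} pixels wide")
```

At f/5.6 the disk spans roughly two of these pixels, at f/16 more than five, which fits the observation that f/16 costs a significant amount of sharpness.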

If we recall MTF, as discussed earlier, each Point Spread Function (PSF) has a corresponding MTF. Each part of the system has its own MTF, and the MTF of the system is the product of the MTFs of its parts.
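As a sketch of how the multiplication works, here are two textbook building blocks: the diffraction MTF of an ideal lens with a circular aperture, and the MTF of a square pixel with 100% fill factor. A real system has more factors (lens aberrations, OLP filter, defocus), so this is only an idealised upper bound. The wavelength and the 6 micron pitch are assumed example values.

```python
import math

def diffraction_mtf(f_cy_mm: float, f_number: float, wavelength_um: float = 0.55) -> float:
    """MTF of an aberration-free lens with a circular aperture."""
    cutoff = 1000.0 / (wavelength_um * f_number)  # cy/mm, no contrast beyond this
    s = f_cy_mm / cutoff
    if s >= 1.0:
        return 0.0
    return (2.0 / math.pi) * (math.acos(s) - s * math.sqrt(1.0 - s * s))

def pixel_mtf(f_cy_mm: float, pixel_pitch_um: float) -> float:
    """MTF of a square pixel aperture as wide as the pitch (|sinc|)."""
    x = math.pi * f_cy_mm * pixel_pitch_um / 1000.0
    return 1.0 if x == 0.0 else abs(math.sin(x) / x)

def system_mtf(f_cy_mm: float, f_number: float, pixel_pitch_um: float) -> float:
    """System MTF is the product of the MTFs of its parts."""
    return diffraction_mtf(f_cy_mm, f_number) * pixel_mtf(f_cy_mm, pixel_pitch_um)

PITCH_UM = 6.0
nyquist = 1000.0 / (2.0 * PITCH_UM)
for n in (5.6, 8, 11, 16):
    print(f"f/{n}: system MTF at Nyquist = {system_mtf(nyquist, n, PITCH_UM):.2f}")
```

Even in this idealised model, the contrast at Nyquist drops steadily with every stop, from around 0.43 at f/5.6 to around 0.10 at f/16.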

This shows MTF of my Hasselblad Sonnar f/4 CFE at different apertures, on the P45+ back. Quite obviously f/5.6 is best of the apertures tested. Just as an example, it seems that it would outperform my Sony A7rII with my Sony 90/2.8 G at both f/5.6 and f/8. But, it would be a bit behind the Sony at f/11.

By and large, observers mostly don't notice diffraction before stopping down to f/16 or f/22. Part of the explanation is that human vision is most sensitive to relatively low frequencies, like 600 cy/PH, and in that range the effect of diffraction is not so large.

Depth of field and defocus

I would think that it is easiest to understand DoF looking at the image plane. This is the best illustration I have seen:

Here we have three objects: one beyond the focus distance (1), one at the focus distance (2) and one in front of the focus distance (3). All throw a converging beam behind the lens, but only the beam from the object in focus converges in the focal plane. The other two converge in front of or behind the focal plane. So the points out of focus will be blobs or 'bokeh balls'. Normally they would be called circles of confusion (CoC). Closing the aperture cuts down the diameter of the beam. So the points are not brought into focus, but the 'blob' or 'CoC' gets smaller.

Stopping down the aperture two stops cuts the diameter of 'blob', 'CoC' or 'bokeh ball' in half.
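The geometry can be sketched with the thin lens equation. This is an idealised model (a real lens is not a thin lens), and the 90 mm focal length and the distances are arbitrary example values.

```python
def image_distance_mm(focal_mm: float, subject_mm: float) -> float:
    """Thin lens equation 1/f = 1/s + 1/v, solved for the image distance v."""
    return focal_mm * subject_mm / (subject_mm - focal_mm)

def blur_diameter_mm(focal_mm: float, f_number: float,
                     focus_mm: float, subject_mm: float) -> float:
    """Diameter of the blur spot on a sensor placed at the focus image plane."""
    aperture_mm = focal_mm / f_number
    v_focus = image_distance_mm(focal_mm, focus_mm)      # where the sensor sits
    v_subject = image_distance_mm(focal_mm, subject_mm)  # where the beam converges
    # Similar triangles: the cone has the aperture as base and v_subject as height.
    return aperture_mm * abs(v_focus - v_subject) / v_subject

# Example: 90 mm lens focused at 3 m, point source at 5 m.
for n in (4, 5.6, 8, 16):
    blur = blur_diameter_mm(90, n, focus_mm=3000, subject_mm=5000)
    print(f"f/{n}: blur diameter {blur:.3f} mm")
```

Going from f/4 to f/8 (two stops) halves the aperture diameter and therefore halves the blur; the point where the beam converges does not move.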

Now, the question arises: what is sharp enough? Tradition says that a 'blob' diameter of 1/1500 of the image diagonal will be regarded as acceptably sharp. A 24x36 mm camera has an image diagonal of 43 mm. 43/1500 -> 0.0287 mm, that is around 0.03 mm, and that figure is used in normal DoF calculations. A 33x44 mm sensor has a diagonal of 55 mm, so we would end up with 55/1500 -> 0.037 mm.

But our sensors may have pixels of 4-6 microns! A 24 MP 24x36 mm camera has 6 micron pixels. Our 0.03 mm CoC covers about 707 square microns while a pixel is 36 square microns. So 19.6 pixels fit within that CoC. So, acceptable DoF corresponds to 24/19.6 -> 1.2 MP.

If we did the same calculation for a 50 MP 44x33 mm sensor, the figures would be about the same.
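The arithmetic can be checked with a short sketch. The pixel pitch is derived from sensor size and pixel count assuming square pixels that cover the whole sensor, which is my own simplification.

```python
import math

def coc_mm(width_mm: float, height_mm: float, divisor: float = 1500) -> float:
    """Traditional circle of confusion: image diagonal / 1500."""
    return math.hypot(width_mm, height_mm) / divisor

def pixel_pitch_um(width_mm: float, height_mm: float, megapixels: float) -> float:
    """Pitch of square pixels covering the whole sensor area."""
    return 1000.0 * math.sqrt(width_mm * height_mm / (megapixels * 1e6))

def pixels_per_coc(coc_diameter_mm: float, pitch_um: float) -> float:
    """How many pixels fit inside one circle of confusion."""
    coc_area_um2 = math.pi * (coc_diameter_mm * 1000.0 / 2.0) ** 2
    return coc_area_um2 / pitch_um ** 2

def dof_equivalent_mp(width_mm: float, height_mm: float, megapixels: float) -> float:
    """Resolution, in megapixels, that the DoF criterion actually assumes."""
    pitch = pixel_pitch_um(width_mm, height_mm, megapixels)
    return megapixels / pixels_per_coc(coc_mm(width_mm, height_mm), pitch)

for w, h, mp in ((36, 24, 24), (44, 33, 50)):
    print(f"{w}x{h} mm, {mp} MP: CoC {coc_mm(w, h):.4f} mm, "
          f"DoF criterion corresponds to {dof_equivalent_mp(w, h, mp):.1f} MP")
```

Using the unrounded CoC (0.0288 mm instead of 0.03 mm) gives about 1.3 MP for the 24x36 mm sensor and about 1.4 MP for the 44x33 mm one. The pixel count cancels out, so the result depends only on the 1/1500 criterion and the aspect ratio.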

So, we may need to keep in mind that stopping down doesn't bring things into focus; it just makes the blur smaller.

If we stop down too much, diffraction will come into play, and we may need to raise ISO to stop motion.

So, what should we do?

We may need to keep things in balance. To get the best image quality, it makes sense to use base ISO and expose as high as possible without burning out highlights.

We would also try to keep the aperture at a medium setting, to keep diffraction at bay.

After that we need to manage sharpness. There is only one plane of focus. Placing the main object in focus may make a lot of sense. After that we may need to stop down to get acceptable sharpness.

But if we stop down too much, we will lose some sharpness, and perhaps we will need high ISO to keep motion blur at bay.

Best regards

Erik

--

Erik Kaffehr

Website: http://echophoto.dnsalias.net

Magic uses to disappear in controlled experiments…

Gallery: http://echophoto.smugmug.com

Articles: http://echophoto.dnsalias.net/ekr/index.php/photoarticles

Photons are creating the image

Photons are quantums of light. Light arrives quantums. The pixels capture a significant part of the incoming photons and convert the energy of the photon into a free electron that is collected in the photodiode as a charge. Each pixel can detect a maximum number of incoming photons, that is called Full Well Capacity (FWC). It is an important parameter of the pixel and it is normally measured in electron charges.

Arrival of photons is a random phenomena. So in absolutely even light the distribution of captured electron charges, representing photons, will be a Poison distribution.

The interesting aspect of a Poison distribution is that the standard of deviation for N-samples will be that square root of N.

So, increasing photon count by four will cut noise in half. The practical significance of this is that we want to capture as many photons as possible.

This shows a histogram of a ColorChecker, exposed around 1EV under saturation. Note the highest peak is narrow and the peaks get lower and wider for the darker patches. The widening of the peaks is the noise.

Now, check this ColorChecker that is exposed around 6EV under saturation. The peaks get much wider. The lowest patches and cardboard surrounding the patches float into another.

So reducing exposure, increases noise. This part of the noise is usually dominant. Increasing ISO has no effect on photon statistics.

Photon statistics depend much on sensor size, but not so much on pixel size. How many photons a sensor can detect depends on it's surface and photodiode technology. If that number of photons are distributed ov 25MP or 100MP matters little.

Lens OTF/MTF

The illustrations here are taken from Brandon Dube's article:

https://www.lensrentals.com/blog/2017/10/the-8k-conundrum-when-bad-lenses-mount-good-sensors/

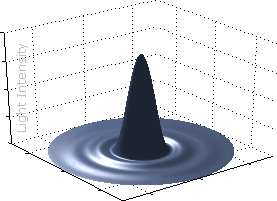

If we shot an image of a very distant small light source, like a distant star trough a perfect lens, it would look like this:

Note the central spot, surrounded by small concentric circles. The small circles are called 'Airy rings' and they are diffraction pattern caused by the aperture of the lens.

Now, lenses are not perfect, they have aberrations:

This is a lens affected by astigmatism and coma.

Optical engineers try to eliminate these aberrations. Most aberrations increase moving away from the optical axis, and they usually decrease when stopping down.

A real spot image may look like this.

Such a spot image could be called a Point Spread Function.

There is a matematical way of describing a PSF called OTF (Optical Transfer Function) that is the 'Fourier Transform' of the PSF. PSF is complex function. Normally lenses are characterized with the absolute value of the OTF. That is called MTF. Both OTF and MTF are two dimensional, but MTF is normally measured in two directions.

Let's take this image:

Two pairs of vertical bars. both equally bright.

Now blur it with a Gaussian blur of radius 5.

We see that both line pairs are blurred, but the large one still keeps contrast while the smaller and tighter one has lost much of contrast.

A mathematician would say that we have convolved the image with a Gaussian PSF having the radius of 5.

Now let's take some real test samples:

This is a small crop of decently sharp image shot on my Hasselblad 555/ELD with a 39M P45+ back at 1:1 view.

This is the same image but shot with a Softar II filter.

The wedges allow us to calculate the MTF for each combo of lens, filter and sensor:

The upper figure shows the MTF. The blue line shows the MTF of the lens and the red one the MTF of the lens + the Softar II.

So, what these curves show how much contrast is lost with varying detail, coarse detail on the left fine detail on the right. The vertical line shows the resolution limit of the sensor.

Resolution is the limit, where we can tell things apart. We can look at fine details of the small resolution chart:

These shows two crops at 200%. Should be viewed at original size below. The structures within the black frame are resolved in both images. But in the left image they are resolved with high contrast and on the left one with low contrast .

After viewing the picture at actual size we can notice that the image on the has color artifacts while the one on the right has low contrast but almost no artifacts.

In this case resolution is limited by the sensor. The MTf we measured was above zero at Nyquist that causes aliasing artifacts.

We could say that the image on the left shows a lens with high resolution and good MTF for small detail while the one the right has good resolution with poor MTF for small details.

It may be said that 'slanted wedges' here are too small and not sharp enough for good MTF calculations, but it may still be a good demo.

OLP filter

As discussed above, if the lens has to much MTF at the Nyquist limit, aliasing will occur. It may seem to accentuate perception of sharpness as it would yield false detail in what normally would be blurred. But with 'Bayer' filters in front of the sensor that false detail arises in different positions for different colors, we can get 'color moiré'.

To reduce that, cameras with large pixels mostly have an Optical Low Pass (OLP) filter. It is normally implemented using four way beam splitter:

Photograph of a point light source, without an OLP and with an OLP filter that was taken off a sensor.

The OLP filter acts as a softening filter that has most effect on the high frequencies, near Nyquist. OLP filters were optional for some early MFD cameras, but they were expensive and they reduce sharpness, so later MFD-s dropped OLP filtering. (For the Mamiya ZD, the normal IR filter was 1000$US and the OLP filter was 3000$US, I would recall)

Diffraction

Diffraction is a property of light. When light is passing through a hole a diffraction pattern emerges. Here is a nice diffraction pattern from Cambridge in Colour:

The diameter of the Airy pattern, as it is normally called, increasing with f-stop number. So, when we stop down the airy pattern gets larger.

This shows a 3.9 micron pixel grid with an airy pattern for f/5.6. It seems that this may not affect image quality, but even apertures like f/5.6 have a small effect on sharpness.

Stopping down to f/16 will have a significant effect on sharpness.

If we recall MTF, as discussed earlier, each Point Spread Function (PSF) can be approximated by an MTF. The MTF of system are the MTF of it's parts, multiplied. Each part of the system has it's own MTF.

This shows MTF of my Hasselblad Sonnar f/4 CFE at different apertures, on the P45+ back. Quite obviously f/5.6 is best of the apertures tested. Just as an example, it seems that it would outperform my Sony A7rII with my Sony 90/2.8 G at both f/5.6 and f/8. But, it would be a bit behind the Sony at f/11.

By and large, observers mostly don't note diffraction before stopping down to f/16 or f/22. A part of the explanation is that our human vision is most sensitive to low frequencies, like 600 cy/PH and it that range the effect of diffraction is not so large.

Depth of field and defocus

I would think that it is easiest to understand DoF looking at the image plane. This is the best illustration I have seen:

Here we have three objects, one beyond focus distance (1), one at focusing distance (2) and one in front of the focusing distance. All throw a converging beam behind the lens, but only beam from the object in focus converges in the focal plane. The two converge in front or behind the focal planes. So the points out of focus will be blobs or 'bokeh balls'. Normally they would be called circle of confusion (CoC). Closing the aperture cuts down on the diameter of the beam. So the points are not getting in focus, but the 'blob' or 'CoC' gets smaller.

Stopping down the aperture two stops cuts the diameter of 'blob', 'CoC' or 'bokeh ball' in half.

Now, the question arises what is sharp enough, tradition says that a 'blob' diameter of 1/1500 of the image diagonal will be regarded acceptably sharp. If we have a 24x36 mm camera it has an image diagonal of 43 mm. 43/1500 -> 0.0286 mm, that is around 0.03 mm and that figure is used in normal DoF calculations. A 33x44 mm sensor has a diameter of 55 mm so, we would end up with 55/1500 -> 0.037 mm.

But, our sensors may have 4-6 micron size! A 24 MP camera has 6 micron pixels. Our 0.03 mm CoC covers 706 square microns while a pixel is 36 square microns. So 19.6 pixels fit within that CoC. So, acceptable DoF corresponds 24/19.6 -> 1.2 MP.

Would we do the same calculation a 50 MP 44x33 sensor, the figures would be the same.

So, we may need to keep in mind that stopping down doesn't bring things into focus just making the blur smaller.

Stopping down to much, diffraction will come into play and we may need to rise ISO to stop motion,

So, what should we do?

We may need to keep things in balance. To get best image quality, it makes sense to use base ISO and expose as high as possible without burning out highlights.

We would also try to keep aperture near medium, to keep diffraction at bay.

After that we need to manage sharpness. There is only one plane of focus. Placing the main object in focus may make a lot of sense. After that we may need to stop down to get acceptable sharpness.

But stopping down to much we will lose some sharpness and perhaps we need high ISO to keep motion blur at bay.

Best regards

Erik

--

Erik Kaffehr

Website: http://echophoto.dnsalias.net

Magic uses to disappear in controlled experiments…

Gallery: http://echophoto.smugmug.com

Articles: http://echophoto.dnsalias.net/ekr/index.php/photoarticles

Last edited: