Stop shouting at me in bold all caps. Haha.

Did you do all that math with a slide rule?

Apologies for the all caps. It was only meant to draw attention and hopefully elicit a reply, not to be disrespectful at all.

As to the substance of my question: should I interpret your evasive joke about the "slide rule" as proof that I hit the nail on the head, and that your claim "I'm just looking at my image at full res (not pixel peeping)" is impossibly contradictory? ;-)

Again: when you say that, which of the two interpretations that I proposed is the correct one?

A) you are looking at a small section of the full-res image at 100% (hence indeed "pixel peeping" ;-))

B) you are looking at a downsampled (i.e., not "full res") version of the full image?

Best,

Marco
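To make the A/B distinction concrete, here is a minimal sketch that computes, for an assumed image and monitor size, what share of the image is visible at 100% and how far the whole frame has to be scaled down to fit. The dimensions (11648 x 8736 px, roughly a GFX 100-class file, on a 2560 x 1440 monitor) are illustrative assumptions; swap in your own.

```python
# Illustrative, assumed sizes: a ~102 MP GFX 100-class frame and a 1440p monitor.
IMAGE_W, IMAGE_H = 11648, 8736
SCREEN_W, SCREEN_H = 2560, 1440


def fraction_visible_at_100(img_w, img_h, scr_w, scr_h):
    """Share of the image's pixels on screen at 100% (one image pixel per screen pixel)."""
    visible = min(img_w, scr_w) * min(img_h, scr_h)
    return visible / (img_w * img_h)


def fit_to_screen_scale(img_w, img_h, scr_w, scr_h):
    """Linear scale needed to show the whole frame; below 1.0 means downsampling."""
    return min(scr_w / img_w, scr_h / img_h, 1.0)


frac = fraction_visible_at_100(IMAGE_W, IMAGE_H, SCREEN_W, SCREEN_H)
scale = fit_to_screen_scale(IMAGE_W, IMAGE_H, SCREEN_W, SCREEN_H)
print(f"A) 100% view shows {frac:.1%} of the image")
print(f"B) whole-frame view is scaled to {scale:.1%} linear ({scale ** 2:.1%} of the pixels)")
```

With these assumed numbers, the 100% view shows only a few percent of the frame, while the fit-to-screen view discards most of the pixels, which is exactly the either/or the question is about.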

It's actually a good point.

Ultra-high-res displays look fantastic, but they cram so many pixels into the screen that we often don't really appreciate the actual resolution of our photos.

That kind of explains the recent renewed pixel lust and people wanting more. I see the same thing happening when editing images on the 16" 2K+ display vs. the 27" 1440p desktop display.
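A quick way to see how much more densely a small high-res panel packs its pixels is to compare pixels per inch. The panel resolutions below are assumptions for illustration (3456 x 2234 for a 16" "2K+" display, 2560 x 1440 for the 27" monitor), and the diagonals are the nominal sizes.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixel density along the panel diagonal, in pixels per inch."""
    return math.hypot(width_px, height_px) / diagonal_in


# Assumed panels, for illustration only.
laptop_ppi = ppi(3456, 2234, 16.0)   # 16" high-density "2K+" display
desktop_ppi = ppi(2560, 1440, 27.0)  # 27" 1440p desktop monitor

print(f'16" panel: {laptop_ppi:.0f} ppi')
print(f'27" panel: {desktop_ppi:.0f} ppi')
# How many times smaller (linearly) each image pixel appears at 100% on the 16" panel.
print(f"ratio: {laptop_ppi / desktop_ppi:.2f}x")
```

Under these assumptions each image pixel at 100% is drawn roughly 2.4 times smaller (linearly) on the 16" panel, so the same file simply looks crisper there.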

Even 150MP will look slightly underwhelming on a 6k display.

Crispy, I think you are probably a world-class pro photog in general and certainly a top pro macro shooter. But I'm not sure you are getting this whole 150-200 MP thing straight. Or at least I don't understand it.

It's going to look incredible on future monitors and there is going to be some further image fidelity benefit.

I've been reading all your posts and tech arguments (discussions) with Jim. I'm not really following this argument of yours about the wall we hit past 100 MP, and the bit about having to shoot 150-200 MP at f/5.6 or the IQ will be bad.

It's not about "the IQ will be bad" - it's about the effective resolution, meaning how much image data is actually available at the end of the day.

You can have a 200MP sensor in your GFX camera, but unless you shoot at a wide enough aperture, diffraction blurs neighboring details together and you won't get a clear enough distinction between them.
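A back-of-the-envelope way to see the effect is to compare the diameter of the Airy disk (to its first dark ring, 2.44·λ·N) with the pixel pitch. The sketch assumes green light (550 nm), a 43.8 mm wide 4:3 sensor, and a hypothetical 200 MP sensor of the same size; once the disk spans several pixels, neighboring pixels are recording overlapping blur rather than separate details.

```python
import math

WAVELENGTH_UM = 0.55    # assumed: green light, 550 nm
SENSOR_WIDTH_MM = 43.8  # assumed: GFX-sized 44 x 33 mm sensor


def airy_disk_diameter_um(f_number, wavelength_um=WAVELENGTH_UM):
    """Airy disk diameter to its first dark ring: 2.44 * lambda * N."""
    return 2.44 * wavelength_um * f_number


def pixel_pitch_um(megapixels, aspect=4 / 3, sensor_width_mm=SENSOR_WIDTH_MM):
    """Approximate pixel pitch for a given pixel count on the assumed sensor."""
    width_px = math.sqrt(megapixels * 1e6 * aspect)
    return sensor_width_mm * 1000 / width_px


for mp in (100, 200):
    pitch = pixel_pitch_um(mp)
    for n in (4, 5.6, 8, 11):
        d = airy_disk_diameter_um(n)
        print(f"{mp} MP (pitch {pitch:.2f} um), f/{n}: Airy disk spans {d / pitch:.1f} px")
```

Doubling the pixel count on the same sensor only shrinks the pitch by a factor of √2, while the Airy disk stays the same size, so at a given f-number the blur spot covers more of the smaller pixels.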

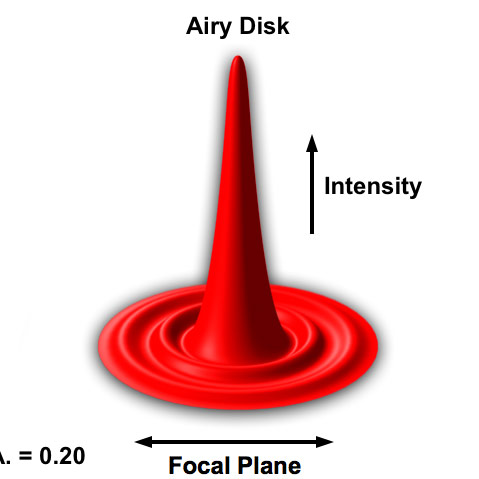

The same principle can be observed in microscopes, when the numerical aperture is too low to clearly separate the individual Airy disks:

https://www.microscopyu.com/tutorials/imageformation-airyna
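For reference, the microscopy form of the Rayleigh criterion gives the smallest resolvable separation as 0.61·λ/NA. Assuming the usual approximation that a camera lens focused at infinity has an image-side NA of about 1/(2N), the same formula becomes the 1.22·λ·N spacing used for camera sensors. The NA values and the 550 nm wavelength below are illustrative.

```python
WAVELENGTH_UM = 0.55  # assumed: green light, 550 nm


def rayleigh_resolution_um(numerical_aperture, wavelength_um=WAVELENGTH_UM):
    """Smallest resolvable separation of two points (Rayleigh criterion): 0.61 * lambda / NA."""
    return 0.61 * wavelength_um / numerical_aperture


def image_side_na(f_number):
    """Approximate image-side NA of a camera lens focused at infinity: NA ~ 1 / (2N)."""
    return 1.0 / (2.0 * f_number)


# Typical-looking microscope objective NAs (illustrative values).
for na in (0.25, 0.65, 1.4):
    print(f"NA {na}: {rayleigh_resolution_um(na):.2f} um")

# The same criterion for a camera lens at f/5.6, which equals 1.22 * lambda * N.
print(f"f/5.6: {rayleigh_resolution_um(image_side_na(5.6)):.2f} um")
```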

So while the resulting file has 200MP, the actual image holds basically the same amount of usable information as a much lower-resolution image. You could reduce its pixel dimensions without losing any data. It's basically a lot of "empty" pixel data, not unlike an interpolated, enlarged image.
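One crude way to put a number on "effective resolution" is to count how many Rayleigh-resolvable points (spacing 1.22·λ·N) fit on the sensor and treat that count as the file's useful detail. This is a simplifying assumption, not a measurement: it ignores lens aberrations, demosaicing and sharpening, and the fact that contrast fades gradually rather than dropping to zero at the Rayleigh spacing, so read the output as a rough guide. Wavelength and sensor size are assumed.

```python
# Crude diffraction-only model; all constants are assumptions.
WAVELENGTH_UM = 0.55                   # green light, 550 nm
SENSOR_W_MM, SENSOR_H_MM = 43.8, 32.9  # GFX-sized sensor


def diffraction_limited_mp(f_number):
    """Megapixels' worth of Rayleigh-spaced points (1.22 * lambda * N apart) on the sensor."""
    spacing_um = 1.22 * WAVELENGTH_UM * f_number
    cols = SENSOR_W_MM * 1000 / spacing_um
    rows = SENSOR_H_MM * 1000 / spacing_um
    return cols * rows / 1e6


def effective_mp(sensor_mp, f_number):
    """Under this model, the file holds no more detail than the smaller of the two limits."""
    return min(sensor_mp, diffraction_limited_mp(f_number))


for n in (4, 5.6, 8, 11):
    print(f"f/{n}: diffraction budget ~{diffraction_limited_mp(n):.0f} MP, "
          f"200 MP sensor -> ~{effective_mp(200, n):.0f} MP")
```

When `effective_mp` returns less than the sensor's pixel count, the model says the extra pixels mostly oversample the same blurred image, which is the "empty" pixel data described above.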

You actually provided a good example in your comparison of the 100II and the Q3, when you shot the 100II at f/9 and the Q3 at f/8.

At f/9 the 100MP sensor is limited by the aperture, so its effective resolution is quite a bit lower. That's why the results look extremely similar, and also pretty close to a 50MP file (though I think it's slightly more than 50MP, perhaps 65MP?).

You might want to compare images shot at, for example, f/6.7; I'm sure you'd see a bigger difference between the GFX 100II and the Q3 then.

The point is: we're at a level where small changes in aperture will knock down your effective resolution considerably and very quickly.
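Under a diffraction-only assumption, blur grows linearly with the f-number, so the number of resolvable points on a given sensor area falls with its square. This small sketch (the function name is made up for illustration) shows how a third or half stop changes that budget; it says nothing about lens aberrations, which often dominate at wider apertures.

```python
def relative_pixel_budget(f_from, f_to):
    """Factor by which the diffraction-limited point count changes from f_from to f_to.

    Assumes diffraction alone sets the limit: blur size grows linearly with N,
    so the number of resolvable points on a fixed sensor area scales as 1/N^2.
    """
    return (f_from / f_to) ** 2


for f_from, f_to in ((5.6, 6.3), (5.6, 8), (6.7, 9)):
    print(f"f/{f_from} -> f/{f_to}: x{relative_pixel_budget(f_from, f_to):.2f}")
```

A full stop (N multiplied by √2) halves the budget under this model, so even a third of a stop is a noticeable step.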

Sure, if you're "lucky" enough to have straight lines or very fine structures in your image (like the staircases), you may still see some aliasing. You may be able to reduce it with a higher-resolution sensor, but that won't yield more usable detail.
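Aliasing happens when a repeating pattern is finer than the sensor's Nyquist limit (half its sampling rate): the samples then fold it back to a false, coarser frequency. A denser sensor raises that limit, which is why it can reduce aliasing. The sketch below uses hypothetical numbers (a 150 cycles/mm pattern and pixel pitches of 3.76 µm and 2.66 µm) and ignores how much contrast the lens and diffraction actually leave in such a fine pattern.

```python
def alias_frequency(signal_freq, sample_rate):
    """Frequency a sampled sinusoid appears to have, folded into [0, sample_rate / 2]."""
    f = signal_freq % sample_rate
    return min(f, sample_rate - f)


# Hypothetical numbers, in cycles per mm on the sensor.
pattern = 150.0  # a fine repeating structure, e.g. railings or stair edges
for name, pitch_um in (("100 MP", 3.76), ("200 MP", 2.66)):
    rate = 1000.0 / pitch_um  # samples per mm
    seen = alias_frequency(pattern, rate)
    status = "aliased" if seen != pattern else "recorded as-is"
    print(f"{name}: Nyquist {rate / 2:.0f} c/mm, pattern appears at {seen:.0f} c/mm ({status})")
```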

That's all there is to it. Of course we can look at the details at 300%, but as we know from Jim, pixel peeping is unproductive, so 3x pixel peeping is at least 3x as unproductive, hehe.